|

Three years later I still think there is an inconsistency, but a Random Number Generator is not a good example to illustrate it. A Random Number Generator does not describe a particular random string at all, and therefore cannot be the shortest description of a particular random string. It describes the set of all random strings. The RNG is not a reproducible description of a particular string, because every generated string is unique. This is even truer for a true random sequence based on radioactive decay or any other natural source of true randomness (8).

However there are other systems like π (pi) and cellular automata (9) that reproducibly and uniquely describe an arbitrary long random sequence. The paradox is that the length of these simple algorithms tells us nothing at all about the 'information content' or complexity of the sequence they produce/describe because the 'information content' can have any value depending on the length of the output. Since π infinite length, its information content should be infinite.

Information and Meaning

The kind of 'information' produced by a mindless computer program or a natural, physical mindless process

is cheap, worthless, meaningless information. Just let it run forever and it produces an endless stream of 'information'! That is not what humans call information. There is the real paradox.

Another way of stating the paradox is: a long random string of letters has a higher Information Content then a book of the same length. This is so because a random string is hardly compressible at all, while the content of a book can easily be compressed (as everyone knows who uses PC compression programs as pkzip, winzip, etc). In fact a random string has the maximum amount of information. This definition of information was not invented by Paul Davies, but by Shannon, Chaitin and Kolmogorov.

The next 3 blocks, a grey block containing 'information', the next with some noise added, and one with the maximum amount of noise added,

show an increasing information content according to the mathematical definition:

increasing

mathematical

information

content |

3.712 bytes |

9.728 bytes |

11.394 bytes |

increasing

meaningful

information |

no

information |

degraded

information |

information |

| Information content (same 3 jpg images are used in both rows) |

The 'information content' is reflected in the size of the jpeg files, because jpeg is a compression algorithm (all three have 50x75 pixels and have the same compression factor [14]).

So the ranking of human information (books) and random strings (noise) on the basis of the compressibility criterion yields a result clearly contradicting common sense knowledge. The point is that the word 'information content' is misleading outside the mathematical context. I propose to call that kind of Information Content just what it is: compressibility and nothing more.

What is the difference between a random string and a book? The words in a book are carefully selected for their meaning. It should be no surprise that the compressibility definition of Information Content yields such paradoxal results, because compressibility ignores the meaning of the symbols.

Another New Scientist reader Tony Castaldo wrote:

"It is only opposite if the book is going to be used by a human for human purposes, and of course everyday usage (in your world) is going to be about humans for human purposes. For a computer, the book would have lower information content."

However a software program written in Fortran, C++, Visual Basic, etc would have a lower Information Content than a random string.

I now list a number of authors pointing out the difference between information and meaningful information.

Hubert Yockey

"I pointed out above in the discussion about finding a definition and a measure of 'information' that the mathematical definition does not capture all the meanings that are associated intuitively with that word. By the same token, the Kolmogorov-Chaitin complexity may likewise not capture all that one thinks of in the word 'complexity', nevertheless, it will prove very useful in molecular biology"

[12].

John Maynard Smith

"Information theorists use the phrase 'information is data plus meaning'" [11].

This is exactly what I mean. But who are those 'information theorists'?

Radu Popa

"Shannon's information theory is purely mathematical and makes information oblivious to function, whence it cannot distinguish between meaningful (instructional) signals and noise." [13]

Richard Feynman

"How can a random string contain any information, let alone the maximum amount? Surely we must be using the wrong definition of "information"? But if you think about it, the N-bit strings could each label a message ..." [3]

Labels! Those random strings are labels, not messages! Without label each random string is just a random string and nothing more. Labels create meaning. Those random strings could be labeled in many ways. A code table must be created such as the ASCII code table or the morse code table or the genetic code table. But then there is information in the code table. This type of information must fall outside the original set of random strings.

Ian Stewart

People talk an awful lot of nonsense about DNA as "information" for an organism - as if information has any meaning outside of a well-defined context. [...] However, what's really important about a message is not the quantity of information, but its quality. "Two plus two makes seventeen" is longer than "2+2=4" but is also nonsense.

[4]

Warren Weaver

"The word information, in Shannon's theory, is used in a special sense that must not be confused with its ordinary usage. In particular, information must not be confused with meaning..." [5]

Paul Davies

"All random sequences of the same length encode about the same amount of information, but the quality of that information is crucial..." [6]

The helpful insight of Davies is that meaningful information must be a very specific and small subset of all random sequences. However: how do we define 'quality'? How do we define the subset?

James Gleick

"The birth of information theory came with its ruthless sacrifice of meaning–the very quality that gives information its value and its purpose."

from The Information A History, a Theory, a Flood (2011) quoted by Andrew Robinson in Science 30 Sep 2011:

"Introducing his The Mathematical Theory of Communication, the founder of information theory, Claude Shannon, bluntly declared meaning to be "irrelevant to the engineering problem".

Jim Crutchfield

Later I discovered that Crutchfield noted the above-mentioned paradox: pure randomness produces a high Shannon information quantity and also a high Kolmogorov algorithmic complexity.

"Information theory as written down by Shannon is not a theory of information content. It's a quantitative theory of information."

Crutchfield designed a measure of meaningful information that escapes this paradox.

He called it statistical complexity. Statistical complexity is low when randomness is very

high, but is also low when randomness is very low (regularities). But in the intermediate range,

where there's randomness mixed in with regularity, the statistical complexity is high

[7].

I am not sure if Crutchfield succeeded, but he tries to avoid the paradox.

Proposal

As noted above I propose to call the mathematical Chaitin-Kolmogorov concept of information 'compressibility' in order to prevent confusion of 'mathematical information' with 'human information'. Furthermore, inspired by William Dembski's idea of specification [2], I propose the following. The subset of random strings humans call information, is determined by 'a dictionary of words'. It is really the dictionary that determines the interesting subset we call information. The amount of meaningful information in a string of symbols depends on the amount of matches it has with strings in an established dictionary. This is valid for human language but also for DNA language. Geneticists determine the meaning of a piece of DNA by searching in an established 'gene dictionary' (established from many other species). The rest of the DNA string can be assumed random until a match is found with a dictionary word. This method has recently been demonstrated by a team that used a bigger human genome 'gene-dictionary' (11 in stead of 2 databases) and found 65.000 - 75.000 matches (in stead of 30.000).

Of course there is a relation with the presence of 'dictionary words' and compressibility. But there is no linear relation between compressibility and meaning. My proposal is Dictionary based Information Content: information content based on a dictionary specified in advance. This is not the maximum compressibility that communication engineers and webgraphics designers are after, however it is definitely not subjective or arbitrary, because it easily can be implemented and executed by a computer program and it will capture 'meaning' in a quantitative way. Additionally, it shows that meaning is relative to a dictionary. A French text tested with a Dutch dictionary will result in very low values. The software itself can test which dictionary yields the best result.

The disadvantage of this proposal is that a text containing random but correctly spelled words or even a dictionary itself has the highest score, which is not what we want. A grammar-checker should be added. The more grammar errors the less the information content. But then, a text without spelling or grammar errors can be gibberish because such a 'text' can be produced by a computer program! [this has been done and submitted to a scientific journal]. Worse, a text with some spelling and grammar errors can still be very meaningful.

Conclusion: for detecting meaningful DNA (genes!) in a genome, the dictionary method works fine. The dictionary method works also for separating a string of random letters from a string of words, but it is unable to detect meaningfull texts. Consolation: even humans can fail to detect meaning in correctly spelled texts.

Postcripts

July 2001

I am very honoured that Dr Huen YK, Cah Research Centre, Singapore, has referenced version 1 of this article in his Mathematical Properties of DNA: Algebraic Sequence Analysis, Online Journal of Bioinformatics Volume 1: 42-59, 2001. [this paper has been removed].

See his Brief Comments on junk DNA: is it really junk? (Feb 2002)

Wolfram's rule 30 conflicts with algorithmic information theory

22 June 2002, updated 25 Jan 2004 (10)

Stephen Wolfram describes that simple algorithms (cellular automata)

can produce complex behaviour ("A New Kind of Science", 9).

One algorithm (cellular automata, rule 30) produces a perfect random result.

All statistical tests failed to find deviations from randomness (p.1085) and

claims of others to find regularities are wrong, Wolfram says.

The rule-30 algorithm is deterministic: "if one always ran the cellular automation [rule 30] with the

particular initial conditions, then one would always get exactly the same sequence of 0's and 1's" (p.317).

Wolfram calls this result "intrinsic randomness", because there is no random input to this system.

Now, that is a most remarkable result: a deterministic algorithm produces a truly random sequence.

According to algorithmic information theory any sequence of data that cannot be produced by an algorithm that is shorter than the data itself is 'algorithmically random' (uncompressible).

But at the same time Wolfram states that "any data generated by a simple program can by definition never be algorithmically random" (p.1067). But this clearly conflicts with rule-30,

because the output of his rule-30 algorithm is truly random. (a)

How does Wolfram solve this conflict? (b). I did not find any evidence that Wolfram rejects the notion of algorithmic randomness. On the contrary, he even asserts that by the early 1990s the notion of algorithmic randomness has become generally accepted as the appropriate ultimate definition of randomness." (p.1068) (even though it turns out to be undecidable in general whether any particular sequence is algorithmically random). The only thing he has to say is a very cryptic statement: 'algorithmic randomness cannot be directly relevant to the kind of randomness of cellular automata"! (p.1067). But how could algorithmic information theory be irrelevant for any data set, if it is the ultimate and general theory of randomness? It is amazing that Wolfram remains silent on this crucial point (c).

It seems to me, that rule-30 shows that the notion of algorithmic information content is flawed. Wolfram discusses several data compression methods applied to his cellular automata. Consider for example the asymmetry of generating and compressing strings: on the one hand simple algorithms producing monotone and repetitive patterns and mixed repetitive/random patterns, and on the other hand when data compression techniques are applied to the data sets, they give short descriptions of different lengths.

Monotone and repetitive patterns are strongly compressible and random patterns not, but very similar

simple algorithms generate them all.

The more I think about the very idea of a cellular automata algorithm, the more it seems to invalidate the algorithmic theory of information and complexity. They can be run infinitely long, so the output can grow to an infinite length. Especially rule 30 can produce a complex and non-compressible pattern of an arbitrary size (d). To measure the complexity of such an output by the length of its shortest description is definitely meaningless, because no matter how long the output, the algorithm that produced it stays the same. The rule-30 cellular automata always produces the same output with the same initial conditions (it is deterministic). So it really is a simple description/prescription of that particular output.

Finally, returning to William Dembski (2),

his Law of Conservation of Information is refuted by rule-30, since rule-30 generates information for free! (e)

Comments of a mathematician who published about algorithmic information theory:

- I would tend to agree that algorithmic information theory doesn't capture our intuitive notion of complexity.

But I'd also point out that intuitive notions are often inchoate and inconsistent; making these notions precise often shows how our intuition can be wrong. No one has yet succeeded in replacing algorithmic information theory with a better notion.

- The conflict exists solely in Wolfram's mind. He is equivocating, using two different notions of "random". One is the notion from algorithmic information theory; the other is the vague, fuzzy fact that a handful of statistical tests don't distinguish between the output of rule 30 and a random sequence. But there are MANY examples of deterministic systems that pass many statistical tests, and they have been known for a while. (In fact, these systems are even better than Wolfram's, because you can prove that these systems pass all POLYNOMIAL-TIME statistical tests, if you make a certain complexity-theoretic assumption. See the work of Andrew Yao and Blum-Blum-Shub, for example.) Rules 30 and 110 are only of interest because of their simplicity. No one has proved, for example, that they pass all polynomial-time statistical tests.

- It is only irrelevant in the sense that if one applies algorithmic information to rules like rule 30 and rule 110, one quickly finds they are NOT random in the algorithmic information sense. Thus algorithmic information theory does not capture our "intuitive" notion that rule 110 is "complex". But perhaps this shows our intuition is wrong. Or perhaps it shows that "complex" is a vague and uncapturable notion.

- This is wrong. Rule 30's pattern IS NOT "non-compressible". You can compress it very simply by saying "Rule 30" + description of input + length of output.

- It does not generate information in the algorithmic information sense.

This discussion shows the difficulty of an outsider to assess the value of theoretical claims outside one's own expertise. It seems that algorithmic information theory is a nearly unassailable truth for professional mathematicians. [GK]

|

Letter Serial Correlation

Posted: October 20, 2009

In this section several articles are posted describing a novel method for a statistical analysis of texts. This method is based on analyzing the "letter serial correlation" (LCS) that has been discovered to exist in semantically meaningful texts, regardless of language, authorship, style, etc. It is absent in meaningless strings of letters; applying the LSC text enables one to distinguish between a meaningful message and gibberish even if the text's language is unknown and even if the text's alphabet is not familiar. Thus the statistics in question opens a way for an analysis of texts beyond the limitations of the classic information theory.

|

Truly Random

20 feb 2020

"A truly random number is hard to find. The numbers chosen by people are not random at all, and the pseudorandom numbers created by computers are not very random either. Now scientists have developed a way to generate random numbers from a genuine source of randomness – the unpredictable stage of exactly when, where and how a crystal starts to grow in a solution.

The researchers analysed the numbers generated from crystals grown in three solutions and found that they all passed statistical tests for the quality of their randomness."

Tom Metcalfe Really random numbers created from crystals, Chemistry World, 18 February 2020. (free access)

|

Notes:

- Letter to the New Scientist by Gert Korthof (9 Oct 1999) and a reply to my letter to the New Scientist (30 Oct 1999).

- On the origin of information by means of intelligent design a review by Gert Korthof.

- Richard Feynman (1999) Feyman Lectures on Computation, p120.

- Ian Stewart (1998) Life's other secret, p239.

- Claude Shannon and Warren Weaver (1949): The Mathematical Theory of Communication.

- Paul Davies (1999) The Fifth Miracle, p119.

- Tom Siegfried (2000), The Bit and the Pendulum, chapter 8, p169-171.

- A pseudo-random string generated by a computerprogram. A true

random string is generated by a natural process such as radioactive decay.

See for explanation site of Mads Haahr and

Genuine random numbers, generated by radioactive decay.

- Stephen Wolfram (2002) A New Kind of Science, p28, p1085. There is a critical letter to Nature 418,

17 (04 July 2002) by Michael Phillips Beautiful vistas, but is this really science?, explaining that there is

no link with reality. There is a (positive) review of the book by John L Casti "Science is a computer program",

Nature, 417, 381-382 (23 May 2002). There is a news feature article by Jim Giles (assistant news and features editor): "Stephen Wolfram: What kind of science is this?", Nature, 417, 216-218 26 May 2002.

- I thank Otto B. Wiersma and Jeffrey Shallit for discussion.

- John Maynard Smith, Eörs Szathmáry (1999) The Origins of Life. From the Birth of Life to the Origin of Language, p.11.

- Hubert Yockey (1992) Information theory and molecular biology. See review on this site.

- Radu Popa (2004) Between Necessity and Probability: Searching for the Definition and Origin of Life, p.95

- The amount of noise added in both cases is 400% (maximum) and has a Gaussian distribution (Photoshop).

Each resulting figure is unique and has different information content (file size). Only one is given.

The jpeg files do not have maximal compression, so file sizes are not equal to information content.

Further Reading:

- Christophe Menant Introduction to a Systemic Theory of Meaning. [ 14 Nov 2004 ]

- NewScientist.com news service 17 August 04: Prize draw uses hear for random numbers. "Intel's random number generating chip uses thermal noise from transistors as its source of randomness".

- Ricardo B.R. Azevedo et al (2005) "The simplicity of metazoan cell lineages", Nature, 433, 152-156 (13 Jan 2005) define the algorithmic complexity of a cell linage as the length of the shortest description of the lineage based on its constituents sublineages. According to this definition the complexity the embryonic developmental process is simpler than would be expected by chance. This is a very interesting example of the application of Kolmogorov complexity to biology. [ 13 Jan 2005 ]

- Thomas D. Schneider wrote to me: Resolution to your information paradoxes [14 May 2005]

"Gert:

Information Content, Compressibility and Meaning (this page)

You are right, there is a problem with the way most people present information measures. But if you go back to Shannon and read his work *carefully* you will see that *in the presence of noise* one must take the information measure as a difference of uncertanties.

I have written up this pitfall at: http://www.lecb.ncifcrf.gov/~toms/pitfalls.html

My viewpoint is that 'algorithmic complexity' is a useless concept, especially since nobody can actually give you measures of the thing!

Shannon's measure works and can be used to evaluate information in living things. You can explore my entire web site but I think that you will find my paper on the evolution of information to be the most interesting:

T. D. Schneider (2000), Evolution of Biological Information, Nucleic Acids Res, Second issue in the month, July 15, release date July 10, 28,14, pp2794-2799, 2000.

|

- See more about information and meaning "What is information?" (pp.92-104) in Computer Viruses, Artificial Life and Evolution by Mark A. Ludwig (review).

- Gregory Chaitin (2005) Meta Math! The Quest for Omega, Pantheon Books, 223 pages. Review: Nature, 439, 16 Feb 06 pp790-791.

- Gregory Chaitin (2006) "The Limits of Reason", Scientific American, Mar 2006. "Ideas on complexity and randomness originally suggested by Gottfired W. Leibniz in 1686, combined with modern information theory, imply that there can never be a "theory of everything" for all of mathematics."

- Robert M. Hazen, Patrick L. Griffin, James M. Carothers, and Jack W. Szostak (2007) Functional information and the emergence of biocomplexity, PNAS, published online May 9, 2007;

"It has been difficult to define complexity in terms of a metric that applies to all complex systems."

"Despite this diversity, a common thread is present: All complex systems alter their environments in one or more ways, which we refer to as functions." "Function is thus the essence of complex systems".

"Here we explore the functional information of randomly generated populations of Avida organisms." Gene reductionism is prevented: an Avida genome consist of multiple lines of machine instructions. "None of these computational tasks can be performed by the execution of a single instruction; indeed, the shortest functional program requires five instructions. The computational ability (function) of Avida organisms

thus emerges from the interaction of instructions".

- Valerio Scarani (2010) 'Information science: Guaranteed randomness', News and Views. Nature 464, 988-989 (15 April 2010)

"You have received a device that is claimed to produce random numbers, but you don't trust it. Can you check it without opening it? In some cases, you can, thanks to the bizarre nature of quantum physics."

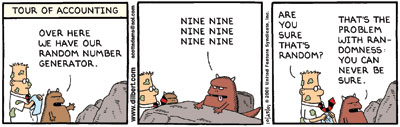

"Starting with the comic strip, Dilbert's guide is uttering a scientific truth: the list 9, 9, 9, 9, 9, 9 is as valid an output of a generator of random numbers as is 1, 2, 3, 4, 5, 6 or 4, 6, 7, 1, 3, 8. In fact, in a long enough sequence of lists, any list of numbers should appear with the same frequency. Classic, 'black-box', tests of randomness exploit this idea: they check for the relative frequencies of lists. But, in practice, no such test can distinguish a sequence generated by a truly random process from one generated by a suitable deterministic algorithm that repeats itself after, for example, 1023 numbers. Moreover, in this way, whether the numbers are private cannot be checked: even if the sequence had initially been generated by a truly random process, it could have been copied several times, and the random generator may just be reading from a record."

- S. Pironio et al (2010) Random numbers certified by Bell's theorem', Nature, 15 Apr 2010.

"The characterization of true randomness is elusive. There exist statistical tests used to verify the absence of certain patterns in a stream of numbers, but no finite set of tests can ever be considered complete, as there may be patterns not covered by such tests. For example, certain pseudo-random number generators are deterministic in nature, yet produce results that satisfy all the randomness tests. At a more fundamental level, there is no such thing as true randomness in the classical world: any classical system admits in principle a deterministic description and thus appears random to us as a consequence of a lack of knowledge about its fundamental description."

- Dual_EC_DRBG: Dual_EC_DRBG is a pseudorandom number generator that was promoted as a cryptographically secure pseudorandom number generator (CSPRNG) by the National Institute of Standards and Technology. "the random-number generator, the 'Dual EC DRBG' standard, had been hacked by the NSA so that its output would not be as random as it should have been." Nature, 19 Dec 2013

- Commericial Random Number Generation: Quantis is a family of hardware random number generators (RNG) which exploit elementary quantum optical processes as a source of true randomness. [added: 20 sep 2017]

|